Support teams are the unsung heroes of any organization, and they understandably get burnt out by the sheer volume of tickets they handle. Requests for services often exceed the supply of available time and resources. IT service teams in large corporations are constantly responding to requests from the business, often falling into the mode of reacting first to the customers who make the most noise. Meanwhile, customers complain that IT is difficult to work with, unresponsive, and slow to fulfill the requests they need to do their jobs. IT shouldn’t be thought of as a bottleneck. To deliver better customer service, it’s important to focus on the well-being and development of frontline support teams. Typical tiered support teams are highly structured and manage requests via escalations; we recommend a more collaborative approach to service request management.

# Request queues in service management software

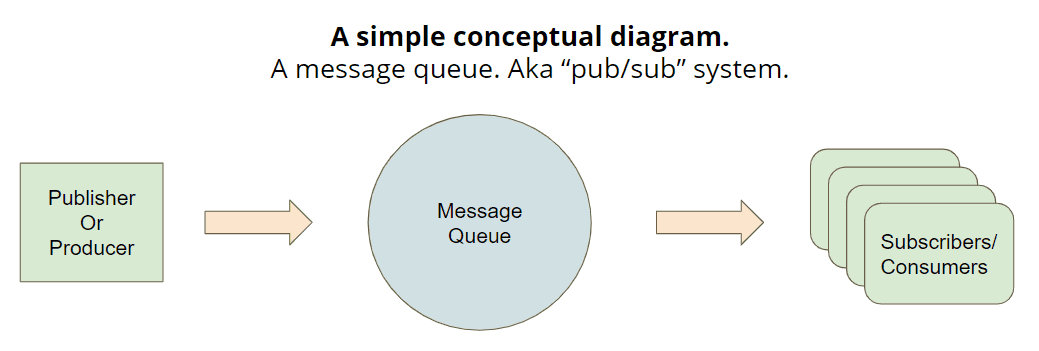

Service requests should be handled as a distinct workstream to help IT teams focus on delivering more valuable work and better enabling the rest of the organization. Service requests are quite often low risk, and can be expedited or even automated. For instance, if a new employee submits a service request for access to a software application, that request can be pre-approved and automatically granted. Considering the variety of incoming change, incident, and service requests you have to handle, separate workstreams and records will allow your team to figure out how best to allocate your resources. All of this means that the IT team can reduce stress, save time, and avoid overly complicated workflows.
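The workstream split described above can be sketched as a small routing function. This is a hypothetical Python model, not the API of any particular service-desk product: `RequestType`, `Ticket`, `Workstreams`, the `catalog_item` field, and the `PRE_APPROVED_ITEMS` set are all names invented for illustration. It assumes each ticket already carries its type (change, incident, or service) and that some low-risk catalog items have been pre-approved.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Optional


class RequestType(Enum):
    SERVICE = "service"
    INCIDENT = "incident"
    CHANGE = "change"


@dataclass
class Ticket:
    requester: str
    kind: RequestType
    summary: str
    catalog_item: Optional[str] = None  # hypothetical service-catalog item ID


# Hypothetical set of low-risk catalog items that have been pre-approved.
PRE_APPROVED_ITEMS = {"software-access:standard-apps"}


@dataclass
class Workstreams:
    # One record list per request type, so each workstream can be staffed separately.
    queues: Dict[RequestType, List[Ticket]] = field(
        default_factory=lambda: {kind: [] for kind in RequestType}
    )
    auto_fulfilled: List[Ticket] = field(default_factory=list)

    def submit(self, ticket: Ticket) -> str:
        """Route a ticket to its own workstream, auto-granting pre-approved service requests."""
        if (ticket.kind is RequestType.SERVICE
                and ticket.catalog_item in PRE_APPROVED_ITEMS):
            # Low-risk and pre-approved: fulfil immediately, no human triage needed.
            self.auto_fulfilled.append(ticket)
            return "auto-fulfilled"
        # Everything else waits in the queue for its own request type.
        self.queues[ticket.kind].append(ticket)
        return f"queued:{ticket.kind.value}"
```

Under these assumptions, a new employee's request for standard application access is granted straight away, while a service request for an item outside the pre-approved set still lands in the service queue, separate from incidents and changes. Keeping one list per type is what lets a team see the volume of each workstream and allocate people accordingly.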

# Request queue example: upgrades

Example: “I need to upgrade the database!”

# How to keep a request queue short

Any requests that aren't immediately handled by an application process are queued. Queuing is usually bad: it often means that your server cannot handle the requests quickly enough.

A larger queue means that requests are less likely to be dropped. But this comes with a drawback: during busy times, the larger the queue, the longer your visitors have to wait before they see a response. This causes them to click reload, making the queue even longer (their previous request stays in the queue, because the OS does not know that they've disconnected until it tries to send data back to the visitor), or causes them to leave in frustration. So having a limit on the queue is a good thing: it limits the impact of the above situation.

You should ensure that requests are queued as little as possible, for example by:

- Making your app faster (if your workload is CPU bound).
- Upgrading to faster hardware (if your workload is CPU bound).
- Increasing your app's concurrency settings (if your workload is I/O bound), e.g. by increasing the number of processes or threads.

If you cannot prevent requests from being queued, then the next best thing to do is to keep the queue short, and to display a friendly error message upon reaching the queue limit. Something like, "We're sorry, a lot of people are visiting us right now. Please try again later." The documentation for PassengerMaxRequestQueueSize tells you how to do that.
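The bounded-queue behavior described above can be modeled in a few lines. This is a minimal, hypothetical Python sketch of the idea, not how Phusion Passenger implements PassengerMaxRequestQueueSize: `BoundedRequestQueue`, `Response`, the limit of 100, and the 503 status are illustrative assumptions. Requests wait in a fixed-size queue; once it is full, new arrivals get an immediate friendly error instead of an ever-longer wait. The wait estimate is a rough back-of-the-envelope figure that assumes every worker takes the same average time per request.

```python
import queue
from dataclasses import dataclass
from typing import Optional

FRIENDLY_MESSAGE = ("We're sorry, a lot of people are visiting us right now. "
                    "Please try again later.")


@dataclass
class Response:
    status: int
    body: str


class BoundedRequestQueue:
    """Holds requests no application process can take yet, up to a fixed limit."""

    def __init__(self, max_size: int = 100):
        # Illustrative limit; a real server exposes its own setting for this.
        self._pending = queue.Queue(maxsize=max_size)

    def enqueue(self, request_id: str) -> Optional[Response]:
        """Queue the request, or reject it with a friendly 503 when the queue is full."""
        try:
            self._pending.put_nowait(request_id)
            return None  # accepted; an idle worker will pick it up later
        except queue.Full:
            return Response(503, FRIENDLY_MESSAGE)

    def next_request(self) -> Optional[str]:
        """Called by an idle worker; returns None if nothing is waiting."""
        try:
            return self._pending.get_nowait()
        except queue.Empty:
            return None

    def estimated_wait_seconds(self, avg_service_seconds: float, workers: int) -> float:
        # Rough estimate: the queue drains at `workers` requests per avg_service_seconds,
        # so a longer queue means a proportionally longer wait for the newest visitor.
        return self._pending.qsize() * avg_service_seconds / workers
```

The design choice mirrors the trade-off in the text: a larger `max_size` drops fewer requests, but `estimated_wait_seconds` grows with every queued request, so past some point an immediate "please try again later" is kinder than a long silent wait.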
